In the rapidly evolving landscape of artificial intelligence, access to powerful language models is no longer the sole domain of large corporations with substantial budgets. The Gemini Pro API, offered through Google AI Studio, has democratized access to some of the most advanced models available today. This step-by-step guide shows how to use it on a Pay As You Go basis, so you can build and innovate without a significant upfront commitment. Beyond the necessary setup, it covers strategic best practices, model selection, and the technical specifications that shape what your projects can do.

We understand that the ability to experiment and scale on demand is paramount for developers, researchers, and innovators. The Pay As You Go model fits this need well: you pay only for the usage you consume, with no hidden fees, no required subscriptions, and complete control over your budget. By following these instructions, you will gain access to a suite of powerful models, including the most recent Gemini iterations, and learn to navigate the Google Cloud ecosystem confidently.

Part 1: The Essential Setup – Enabling Pay As You Go Billing

The journey to harnessing the full power of the Gemini Pro API begins with a crucial step: enabling a billing account in the Google Cloud Console. While Google AI Studio provides a seamless interface for development and prototyping, the full range of paid-tier features, including higher rate limits and access to specialized models, is unlocked through a linked Google Cloud project with active billing. This process is designed to be straightforward, but attention to detail is key.

Step 1: Initiating the Billing Upgrade within Google AI Studio

Navigate to the Google AI Studio interface. In your project’s settings or the API keys management section, you will find a button or link labeled “Set up Billing” or “Upgrade”; the wording depends on whether your project is on the free tier or a legacy plan. Clicking it starts the process by redirecting you to the Google Cloud Console, where the billing account is actually created and linked.

Step 2: Linking a Billing Account in Google Cloud Console

After the redirect, the Google Cloud Console guides you through the Cloud Billing process. You can either link an existing billing account to your project or create a new one. A billing account is simply a payment profile that is charged for your API usage, and it must have a valid payment method, such as a credit card, attached. Although this step requires payment information, the Pay As You Go model means you are billed only for what you use, giving you an immediate and transparent cost structure.

Step 3: Completing the Billing Setup

Follow the on-screen instructions in the Cloud Console to finalize the setup. This may involve confirming your country of residence, agreeing to the terms of service, and verifying your payment method. Once this is complete, the billing account is linked to your Google Cloud project. You will receive a confirmation, and within minutes the paid-tier benefits will be unlocked in Google AI Studio, including access to the most advanced models and significantly higher rate limits for more substantial, production-level applications and experiments.
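Once billing is linked, the API key from Google AI Studio can be used against the Gemini API's REST endpoint. The sketch below, using only Python's standard library, shows the shape of a minimal `generateContent` request. The `GEMINI_API_KEY` environment variable and the `build_generate_request` helper are our own illustrative choices, not part of any official SDK, and the request is only built, not sent, so nothing is billed until you uncomment the `urlopen` call.

```python
import json
import os
import urllib.request

# REST endpoint for the Gemini API (v1beta).
API_BASE = "https://generativelanguage.googleapis.com/v1beta"


def build_generate_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build, but do not send, a generateContent request (hypothetical helper)."""
    url = f"{API_BASE}/models/{model}:generateContent"
    body = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The API key created in Google AI Studio travels in this header.
            "x-goog-api-key": api_key,
        },
        method="POST",
    )


if __name__ == "__main__":
    # Assumption: the key is stored in the GEMINI_API_KEY environment variable.
    key = os.environ.get("GEMINI_API_KEY", "")
    req = build_generate_request("gemini-2.5-pro", "Say hello in one sentence.", key)
    print(req.get_method(), req.full_url)
    # Uncomment to send the request (needs network access and a valid key):
    # with urllib.request.urlopen(req) as resp:
    #     reply = json.load(resp)
    #     print(reply["candidates"][0]["content"]["parts"][0]["text"])
```

Keeping the key in an environment variable rather than in source code makes it less likely to leak into version control.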

Part 2: Navigating Google AI Studio and Model Selection

With billing enabled, Google AI Studio becomes a far more powerful and versatile tool. The interface is your command center for managing API keys, monitoring your usage, and, most importantly, choosing the optimal model for your specific task.

Accessing and Utilizing the Studio Interface

Your primary workspace is Google AI Studio. Log in with the same Google account that owns the billing-enabled project. The interface is intuitive, offering tools for prompt engineering, chat-based applications, and code generation. We recommend familiarizing yourself with the various playgrounds, which let you test prompts and system instructions in real time. This is where you can experiment with the different models and their capabilities before integrating them into a larger application. Monitoring your API usage, either directly in the studio or through the more detailed dashboards in the Google Cloud Console, is invaluable for keeping track of costs and optimizing your calls.
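Usage can also be tracked per call: each `generateContent` response includes a `usageMetadata` object with token counts, which you can multiply by your model's per-token rates. This is a minimal sketch; the prices are placeholders, not Google's actual rates, and `Pricing` and `estimate_cost` are hypothetical names. We also assume that thinking tokens (`thoughtsTokenCount`, reported by Gemini 2.5 models) are billed at the output rate, which you should verify against the current pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token rates in USD. Fill in real values from Google's pricing page."""
    input_per_million: float
    output_per_million: float


def estimate_cost(usage_metadata: dict, pricing: Pricing) -> float:
    """Estimate the cost of one call from a response's `usageMetadata` object."""
    prompt_tokens = usage_metadata.get("promptTokenCount", 0)
    # Assumption: thinking tokens (2.5 models) are billed like output tokens.
    output_tokens = (usage_metadata.get("candidatesTokenCount", 0)
                     + usage_metadata.get("thoughtsTokenCount", 0))
    return (prompt_tokens * pricing.input_per_million
            + output_tokens * pricing.output_per_million) / 1_000_000


if __name__ == "__main__":
    # Placeholder prices, NOT actual Gemini rates.
    demo_pricing = Pricing(input_per_million=1.00, output_per_million=2.00)
    usage = {"promptTokenCount": 500_000, "candidatesTokenCount": 200_000,
             "thoughtsTokenCount": 50_000}
    print(f"Estimated cost: ${estimate_cost(usage, demo_pricing):.4f}")
```

Summing these per-call estimates over a day gives an early signal to compare against the billing dashboards in the Cloud Console.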

Strategic Model Selection for Optimal Performance and Cost

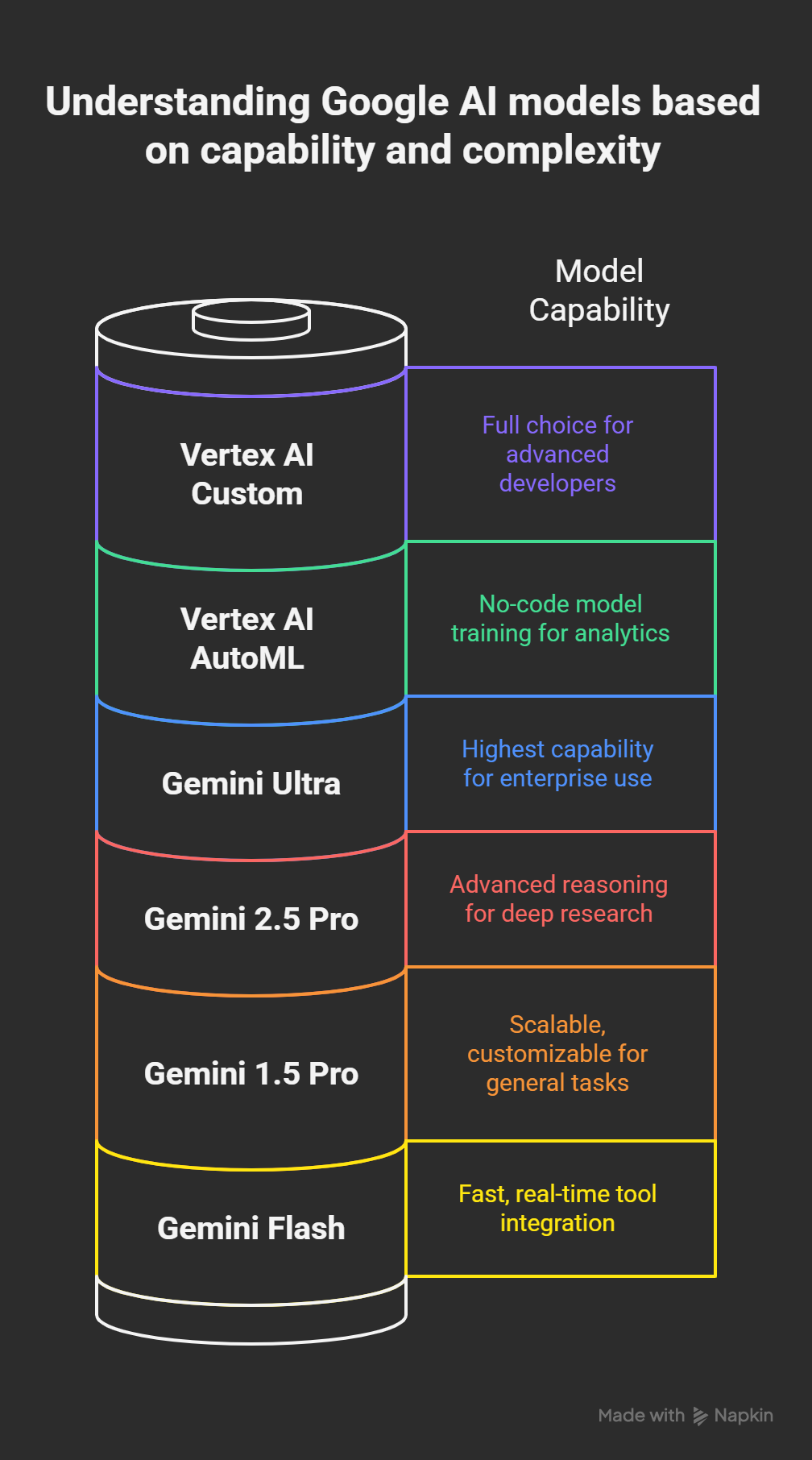

One of the most powerful features of the Gemini ecosystem is the diversity of available models, each with its own purpose and strengths. Under the Pay As You Go model you can switch between them seamlessly, matching each task to the model that best balances capability, speed, and cost.

- Gemini 2.5 Pro: This is the flagship model and Google's most advanced reasoning model to date. It is engineered for the most complex tasks, including deep research, intricate mathematical problems, and sophisticated coding challenges. Its large context window and advanced capabilities make it the ideal choice for projects that demand the highest accuracy and nuanced understanding. When your application requires state-of-the-art performance and the ability to process vast amounts of information, Gemini 2.5 Pro is the definitive choice. Its context window of roughly 1 million tokens (1,048,576) lets it analyze large datasets, extensive codebases, and long-form documents in a single prompt.

- Gemini 1.5 Pro: This model offers a strong balance of capability and efficiency. While it may not match the top-tier performance of its newer counterpart, it remains exceptionally powerful and is often the right model for a wide range of general-purpose tasks. Its generous input token limit of 1,048,576 and output token limit of 8,192 make it a workhorse for applications that need comprehensive context processing but not bleeding-edge reasoning. Its ability to process multiple images and video inputs adds a powerful multimodal dimension.

- Gemini Flash (and its variants): The Gemini Flash series is designed for speed and efficiency. These models are the best choice when latency and throughput are your primary concerns. They excel at real-time applications, low-latency interactions, and high-volume tasks where a quick, accurate response matters more than deep, complex reasoning. Their multimodal support and speed make them an excellent choice for building efficient LLM agents, chatbots, and any application whose user experience depends on rapid response times.
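The selection logic above can be captured as a simple lookup table. This is an illustrative heuristic rather than an official recommendation: the `Priority` categories are our own, and the model IDs (`gemini-2.5-pro`, `gemini-1.5-pro`, `gemini-2.5-flash`) should be checked against the model list in Google AI Studio, since available versions change over time.

```python
from enum import Enum


class Priority(Enum):
    """What matters most for a given workload (categories are illustrative)."""
    REASONING = "reasoning"   # deep research, hard math, complex code
    GENERAL = "general"       # broad tasks needing long context
    LATENCY = "latency"       # chatbots, agents, high-volume traffic


# Hypothetical routing table; confirm model IDs in Google AI Studio first.
MODEL_FOR_PRIORITY = {
    Priority.REASONING: "gemini-2.5-pro",
    Priority.GENERAL: "gemini-1.5-pro",
    Priority.LATENCY: "gemini-2.5-flash",
}


def pick_model(priority: Priority) -> str:
    """Return the model ID to use for a workload with the given priority."""
    return MODEL_FOR_PRIORITY[priority]


if __name__ == "__main__":
    for p in Priority:
        print(f"{p.value:>9} -> {pick_model(p)}")
```

Because the mapping lives in one table, moving a workload to a different model under Pay As You Go becomes a one-line change.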

Part 3: Understanding Technical Specifications and Maximizing Value

To truly master the Gemini Pro API, we must deeply understand the technical specifications that govern each model’s behavior and capabilities. This knowledge empowers us to make informed decisions that optimize performance and cost.

Diving Deep into Token and Data Limits

The concept of “tokens” is central to understanding usage and cost. A token can be a word, part of a word, or even a punctuation mark. The limits for each model, such as the 1,048,576 input token limit for Gemini 1.5 Pro, define the maximum amount of information (text, images, or code) that can be processed in a single prompt, while the output token limit caps the length of the model’s response. Keep both in mind: a prompt that exceeds the input limit is rejected by the API, and a response that reaches the output limit is cut off mid-answer.
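Before sending a very large prompt, a rough pre-flight check can catch requests that would exceed a model's limits. The sketch below relies on the rule of thumb from Google's documentation that a token is about four characters of English text; it is only an estimate, and the API's `countTokens` method returns exact counts. The limits are the Gemini 1.5 Pro figures quoted in this article, and `estimate_tokens` and `fits_limits` are hypothetical names.

```python
# Gemini 1.5 Pro limits as quoted in this article.
INPUT_TOKEN_LIMIT = 1_048_576
OUTPUT_TOKEN_LIMIT = 8_192

# Rule of thumb: about 4 characters per token for English text (estimate only).
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate using ceiling division on the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_limits(prompt: str, max_output_tokens: int,
                input_limit: int = INPUT_TOKEN_LIMIT,
                output_limit: int = OUTPUT_TOKEN_LIMIT) -> bool:
    """True if the estimated prompt size and requested output length both fit."""
    if max_output_tokens > output_limit:
        return False
    return estimate_tokens(prompt) <= input_limit


if __name__ == "__main__":
    doc = "lorem ipsum " * 100_000  # 1.2 million characters
    print(estimate_tokens(doc), fits_limits(doc, max_output_tokens=2_048))
```

The requested output length corresponds to the `maxOutputTokens` field in a request's `generationConfig`; for prompts close to the limit, prefer an exact `countTokens` call over this estimate.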

Multimodal Capabilities: Beyond Text

The Gemini models are not limited to text-based interactions; processing multiple data types is a core feature. With models like Gemini 1.5 Pro, a single prompt can include up to 3,600 images, as well as video and audio. This opens up possibilities in computer vision, video analysis, and automated transcription. We encourage you to explore these multimodal features to create innovative, data-rich applications. For example, you could provide a video clip and ask the model to summarize the key events, or supply a series of images and ask it to describe the story they tell.
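Images can travel in the same request as text by embedding them as base64-encoded inline data next to a text part. The helper below is a hypothetical sketch that only builds the JSON body, using the REST API's camelCase field names. It assumes small images; larger media such as long videos is normally uploaded through the Files API rather than inlined.

```python
import base64


def build_image_prompt(images: list[tuple[bytes, str]], question: str) -> dict:
    """Build a generateContent body pairing inline images with one question.

    `images` holds (raw_bytes, mime_type) pairs, e.g. (data, "image/jpeg").
    """
    parts = [
        # Inline media is sent base64-encoded together with its MIME type.
        {"inlineData": {"mimeType": mime_type,
                        "data": base64.b64encode(data).decode("ascii")}}
        for data, mime_type in images
    ]
    # Placing the text after the images keeps the question last in the prompt.
    parts.append({"text": question})
    return {"contents": [{"role": "user", "parts": parts}]}


if __name__ == "__main__":
    # Placeholder bytes stand in for real image files read from disk.
    frames = [(b"frame-1-bytes", "image/jpeg"), (b"frame-2-bytes", "image/jpeg")]
    body = build_image_prompt(frames, "Describe the story these images tell.")
    print(len(body["contents"][0]["parts"]))
```

The resulting dictionary can be serialized with `json.dumps` and sent to the same `generateContent` endpoint as a text-only prompt.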

Advanced Features: Instructions and JSON Mode

The Gemini Pro API supports system instructions and JSON mode for fine-grained control over model behavior. System instructions allow you to set the persona, tone, and overall behavior of the model, ensuring consistent and high-quality responses. JSON mode is an invaluable tool for developers who require a structured, machine-readable output. This feature forces the model to generate its response in a valid JSON format, making it far easier to parse and integrate into your applications. These advanced features are essential for building reliable and predictable AI-powered systems.
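Both features are configured in the request body: the system instruction sits in its own `systemInstruction` field, and JSON mode is enabled by setting `responseMimeType` to `application/json` in `generationConfig`. The sketch below builds such a body and decodes the model's reply. The helper names and the sample response are illustrative assumptions, and production code should still handle `json.JSONDecodeError`, for instance when a reply is cut off at the output token limit.

```python
import json


def build_json_request(system_text: str, prompt: str) -> dict:
    """generateContent body with a system instruction and JSON mode enabled."""
    return {
        "systemInstruction": {"parts": [{"text": system_text}]},
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        # JSON mode: ask the model to reply with application/json.
        "generationConfig": {"responseMimeType": "application/json"},
    }


def parse_json_reply(response: dict):
    """Decode the JSON text of the first candidate in a generateContent response."""
    text = response["candidates"][0]["content"]["parts"][0]["text"]
    return json.loads(text)  # raises json.JSONDecodeError on malformed output


if __name__ == "__main__":
    request = build_json_request(
        "You are a concise assistant. Reply only with JSON.",
        "Give a title and three tags for an article about Gemini billing.",
    )
    print(json.dumps(request, indent=2))
    # Hand-written sample response, used here instead of a live API call.
    sample = {"candidates": [{"content": {"parts": [
        {"text": '{"title": "Gemini on Pay As You Go", "tags": ["gemini", "billing", "api"]}'}
    ]}}]}
    print(parse_json_reply(sample)["title"])
```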

Wrap Up: Building the Future with Confidence

The Gemini Pro API on a Pay As You Go basis is a powerful and accessible pathway to building sophisticated, AI-driven applications. By following this guide, you can confidently navigate the setup process, strategically select the best model for any given task, and leverage the full technical capabilities of the Gemini ecosystem. The combination of flexible billing, a rich variety of models, and advanced features provides an unparalleled foundation for innovation.

We are committed to empowering creators and developers by providing the most detailed and actionable information available, enabling you to build the next generation of intelligent applications with speed, efficiency, and complete control. The future of AI development is here, and we are ready to make it together, one meticulously crafted prompt at a time.

Selva Ganesh is a Computer Science Engineer, Android Developer, and Tech Enthusiast. As the Chief Editor of this blog, he brings over 10 years of experience in Android development and professional blogging. He has completed multiple courses under the Google News Initiative, enhancing his expertise in digital journalism and content accuracy. Selva also manages Android Infotech, a globally recognized platform known for its practical, solution-focused articles that help users resolve Android-related issues.
